该医学影像NLP比赛要求根据CT影像描述生成诊断报告,数据经脱敏处理,初赛用2万训练样本,复赛增至8万并加入临床信息。文中介绍了基于PEGASUS模型的实现过程,包括数据探索、模型加载与参数设置、数据处理、训练(含FGM对抗训练)、评估(用CiderD指标)及预测等步骤,以优化报告生成效果。

☞☞☞AI 智能聊天, 问答助手, AI 智能搜索, 免费无限量使用 DeepSeek R1 模型☜☜☜

1. 赛题背景

医学影像(如 CT 影像、核磁共振影像)是病情诊断的重要依据,通过医学影像得出诊断报告是针对过程中的重要步骤,也是医疗 AI 研究的前沿热点。本赛道任务要求参赛队伍根据医生对 CT 的影像描述文本数据(即对医学影像特征的描述),生成诊断报告文本。

与传统文本生成任务不同的是,医学影像诊断报告内容具有专业性、明确性和离散性,因此也需要针对性的算法与模型设计。报告生成结果按照指定评价指标(见提交&评审介绍)进行评测和排名,得分最优者获胜。

本数据为医生对若干CT的影像描述的明文数据,以及对应的诊断报告的明文数据,样本量为1份,以便使参赛队伍对比赛数据有直观的了解(Sample数据只是为了增进参赛选手对影像描述和诊断报告的直观了解,实际训练与测试数据不一定与Sample数据具有相同特征或分布)。复赛时额外新增临床信息作为辅助建模信息。

| 列名 | 类型 | 示例 |

|---|---|---|

| report_ID | int | 1 |

| description(输入) | string,影像描述 | 左侧顶骨局部骨质缺如;两侧侧脑室旁见点状密度减低。右侧额部颅板下见弧形脑脊液密度影。脑室系统扩大,脑沟、裂、池增宽。中线结构无移位。双侧乳突气化差,内见密度增高。 |

| diagnosis(输出) | string,诊断报告 | 左侧顶骨局部缺如,考虑术后改变;脑内散发缺血灶;右侧额部少量硬膜下积液;双侧乳突炎。 |

| clinical(输入,复赛加入) | string,临床信息 | 左侧硬膜下血肿术后 |

脱敏后的影像描述与对应影像报告。文本以字为单位脱敏,使用空格分割。Training 数据用于参赛选手的模型训练与预估。初赛仅使用影像描述生成诊断报告;复赛额外加入临床信息,提升建模多样性。其中:

初赛 Training 集规模为 20000 例样本; 复赛 Training 集规模为 80000 例样本。 Training 数据格式(不同列使用分隔符“,”分割):

| 列名 | 类型 | 示例 |

|---|---|---|

| report_ID | int | 1 |

| description | 脱敏后的影像描述,以字为单位使用空格分割 | 101 47 12 66 74 90 0 411 234 79 175 |

| diagnosis | 脱敏后的诊断报告,以字为单位使用空格分割 | 122 83 65 74 2 232 18 44 95 |

| clinical(复赛加入) | 脱敏后的临床信息,以字为单位使用空格分割 | 88 29 17 55 72 |

脱敏后的影像描述和临床信息(复赛),脱敏方法和 Training 相同。Test 数据用于参赛选手的模型评估和排名。其中:

初赛 Test 集分为 A/B 榜,规模均为 3000; 复赛 Test 集分为 A/B 榜,规模均为 7500。 Test 数据格式(不同列使用分隔符“,”分割):

| 列名 | 类型 | 示例 |

|---|---|---|

| report_ID | int | 1 |

| description | 脱敏后的影像描述,以字为单位使用空格分割 | 101 47 12 66 74 90 0 411 234 79 175 |

| clinical(复赛加入) | 脱敏后的临床信息,以字为单位使用空格分割 | 88 29 17 55 72 |

| 材料名称 | 使用链接 |

|---|---|

| 初赛Training集 | 点击下载https://www.heywhale.com/mw-org/gaiic2023/dataset/6412e32536218140148faccb |

| 初赛A榜Test集 | 点击下载https://www.heywhale.com/mw-org/gaiic2023/dataset/6412e32536218140148faccb |

| 初赛B榜Test集 | 待公布 |

| 复赛Training集 | 待公布 |

| 复赛A榜Test集 | 不开放给选手,仅在模型推理过程中,由系统提供路径进行推理。 |

| 复赛B榜Test集 | 不开放给选手,仅在模型推理过程中,由系统提供路径进行推理。 |

| baseline | 点击下载https://www.heywhale.com/u/775890 |

比赛经验分享 本赛的NLP赛题是根据一段影像描述生成一段诊断报告,和机器翻译、文本摘要等一样,都属于seq2seq的任务。官方的baseline里面是手工搭建了一个包含encoder和decoder的transformer模型,线上分数1.8左右。而我们如果能用已经训练好的预训练模型,例如Bart,T5,PEGASUS等,线上效果则会有提升。这个baseline大概分数在2.65左右,期待有大佬能公布3.0以上的topline。

同时其余提分技巧可以参考周周星经验分享,主要总结如下:

预训练,脱敏后的数据属于一种全新的语言,所以可以通过模型的预训练来让模型熟悉这个数据。原理可以参考周周星分享https://www.heywhale.com/org/gaiic2023/competition/forum/64266c731973e8997818034b ,我编写的PEGASUS的mlm预训练任务代码详见mlm-pretrain文件。需要预训练先运行mlm-pretrain文件,生成pre文件夹然后加载模型的时候加载上。

微调参数,主要包括

基础参数

优化器参数(AdamW)以及warmup和衰减策略

预测参数(model.generate):

3.增加trick:伪标签,FGM,EMA,SWA,数据增强,混合精度训练等。(可能有时候会有用,但不是每次都有用)

参考资料:

本次比赛的周周星经验分享:https://www.heywhale.com/org/gaiic2023/competition/forumlist/63fef766b4422ee27402289d

【自然语言处理】【文本生成】使用Transformers中的BART进行文本摘要: https://blog.csdn.net/bqw18744018044/article/details/127181072

paddle实现PEGASUS,中文文本摘要,用这个就够了:https://aistudio.baidu.com/aistudio/projectdetail/4903667?channelType=0&channel=0

paddle实现FGM对抗训练:https://aistudio.baidu.com/aistudio/projectdetail/4327353?channelType=0&channel=0

paddle实现EMA平均:https://aistudio.baidu.com/aistudio/projectdetail/1840154?channelType=0&channel=0

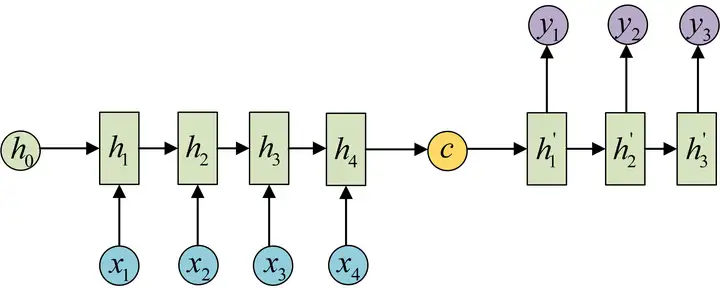

seq2seq任务的输入一个长度为N的字符串,输出一个长度为M的字符串,N->M。常用来处理机器翻译、文本摘要生成等任务。可以使用lstm结构,基本的rnn结构,不过目前最流行的是encoder-decoder结构,也被称作seq2seq模型。简单的encoder-decoder结构如下图,左边是输入和encoder,右边是输出和decoder:

也可以把encoder层的输出作为decoder每一步的输入,如下图:

同时,一般seq2seq任务还经常采用以下的训练和推理方式:

欢迎 来到 北京 -> welcome

欢迎 来到 北京 welcome -> to

欢迎 来到 北京 welcome to -> beijing

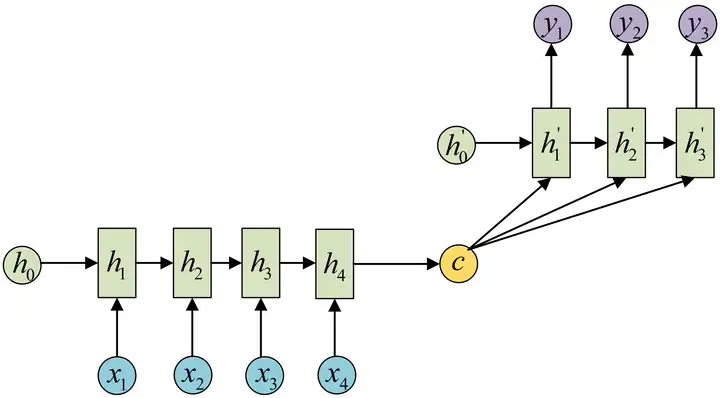

而2015年Bahdanau等人提出的transformer在encoder-decoder结构中也加入了attention结构,也就是给每个词语赋予了不同的权重表示重点。之后各家公司也根据不同的预训练任务,不同的网络结构等训练出各种预训练大模型,这些预训练模型基本都可以直接拿来微调使用。常用的seq2seq任务(机器翻译、文本摘要等)预训练模型:bart,T5,PEGASUS等。

但是本次比赛使用的是脱敏后的数据,相当于全新的语言,所以如果能够在模型微调前进行一个预训练,效果肯定会更好。常见的预训练任务有MLM,DAE等,常见的预训练任务及对应模型总结见知乎的论文笔记:https://zhuanlan.zhihu.com/p/139015428 主要预训练任务总结如下图:

欢迎 来到 北京 -> welcome

欢迎 来到 北京 welcome -> to

欢迎 来到 北京 welcome to -> beijing

欢迎 来到 [MASK] welcome to beijing -> 北京

欢迎 来到 北京 [MASK] to beijing -> welcome

[MASK] 来到 北京 welcome to beijing -> 欢迎

欢迎 来到 北京 [MASK] -> welcome

欢迎 来到 北京 [MASK] to -> welcome

欢迎 来到 [MASK] to -> welcome

欢迎 来到 北京 welcome to beijing -> 翻译对

欢迎 来到 北京 welcome to shanghai -> 不对

欢迎 来到 北京 go to beijing -> 不对

PEGASUS使用的预训练任务是:

PEGASUS详情请见论文 PEGASUS: Pre-training with Extracted Gap-sentences for Abstractive Summarization: https://arxiv.org/abs/1912.08777

import pandas as pd

test = pd.read_csv('data/data201684/preliminary_a_test.csv',header=None,names=['yingxiang'])

train = pd.read_csv('data/data201684/train.csv',header=None,names=['yingxiang', 'zhenduan'])print('train:',train.shape)print('test:',test.shape)train: (20000, 2) test: (3000, 1)

train.head(5)

yingxiang \

0 14 108 28 30 15 13 294 29 20 18 23 21 25 32 16...

1 22 12 1137 41 17 16 96 17 16 34 48 17 30 40 13...

2 14 108 333 30 15 13 31 29 20 829 891 21 25 11 ...

3 22 12 135 269 205 24 267 27 12 376 32 94 109 2...

4 34 12 48 63 109 28 30 40 13 1038 52 43 23 21 5...

zhenduan

0 22 12 38 41 17 81 10

1 66 75 80 116 17 81 16 33 81 16 33 24 122 370 1...

2 35 48 49 150 167 308 282 10

3 14 49 123 55 86 57 54 40 138 124 26 105 133 13...

4 34 12 48 1064 86 57 54 138 10 22 12 38 41 17 8...test.head(5)

yingxiang 0 22 12 74 71 64 56 16 248 14 40 13 83 52 43 44 ... 1 22 12 48 63 16 135 24 267 13 66 146 112 43 23 ... 2 22 12 74 71 64 11 279 288 285 56 40 13 123 55 ... 3 22 12 48 85 63 16 22 12 12 14 32 94 109 28 40 ... 4 34 12 935 1136 13 52 247 153 44 23 1006 25 11 ...

test['len'] = test['yingxiang'].apply(lambda x:len(x.split())) train['len'] = train['yingxiang'].apply(lambda x:len(x.split())) train['len2'] = train['zhenduan'].apply(lambda x:len(x.split()))

影像描述的句子长度分布,可以看到train和test数据集分布差不多

train['len'].hist(),test['len'].hist()

(<matplotlib.axes._subplots.AxesSubplot at 0x7f94f06a3cd0>, <matplotlib.axes._subplots.AxesSubplot at 0x7f94f06a3cd0>)

影像描述的句子最长148个单词,最短9个单词

train['len'].describe()

count 20000.000000 mean 81.201050 std 24.815447 min 9.000000 25% 62.000000 50% 76.000000 75% 97.000000 max 148.000000 Name: len, dtype: float64

test['len'].describe()

count 3000.000000 mean 81.072667 std 24.596539 min 10.000000 25% 63.000000 50% 76.000000 75% 97.000000 max 146.000000 Name: len, dtype: float64

诊断报告的句子长度分布

train['len2'].hist()

<matplotlib.axes._subplots.AxesSubplot at 0x7f94f07f1450>

<Figure size 640x480 with 1 Axes>

诊断报告的句子最长79个单词,最短2个单词

train['len2'].describe()

count 20000.000000 mean 25.336800 std 13.013068 min 2.000000 25% 16.000000 50% 23.000000 75% 32.000000 max 79.000000 Name: len2, dtype: float64

影像报告的词语从9开始到1298,前面的几个数字出现次数较多,train和test差不多。

from collections import Counter

l = train['yingxiang'].apply(lambda x:x.split()).tolist()

l = [i for j in l for i in j]

c = Counter(l)

df = pd.DataFrame({'key':list(c.keys()),'value':list(c.values())})

df['key'] = df['key'].astype('int')

df.sort_values('key')key value 1221 9 38 27 10 67536 21 11 63318 34 12 53630 5 13 60406 ... ... ... 545 1295 74 1165 1296 88 786 1297 68 1225 1298 63 519 1299 76 [1291 rows x 2 columns]

from collections import Counter

l = test['yingxiang'].apply(lambda x:x.split()).tolist()

l = [i for j in l for i in j]

c = Counter(l)

df = pd.DataFrame({'key':list(c.keys()),'value':list(c.values())})

df['key'] = df['key'].astype('int')

df.sort_values('key')key value 908 9 9 23 10 10118 18 11 9405 1 12 8066 10 13 9184 ... ... ... 149 1295 14 471 1296 9 1210 1297 7 973 1298 10 1155 1299 7 [1291 rows x 2 columns]

诊断描述的词语从9开始到1298,前面的几个数字出现次数较多,和影像描述也差不多。

from collections import Counter

l = train['zhenduan'].apply(lambda x:x.split()).tolist()

l = [i for j in l for i in j]

c = Counter(l)

df = pd.DataFrame({'key':list(c.keys()),'value':list(c.values())})

df['key'] = df['key'].astype('int')

df.sort_values('key')key value 1002 9 27 6 10 28945 61 11 15307 1 12 18788 97 13 3455 ... ... ... 1022 1295 12 1005 1296 18 1203 1297 17 1280 1298 18 1218 1299 24 [1291 rows x 2 columns]

定义相关参数

import timeimport osimport numpy as npfrom tqdm import tqdmfrom functools import partialimport pandas as pd# 官方baseline中的评分标准CiderDfrom eval import CiderDfrom visualdl import LogWriterfrom datasets import load_datasetfrom paddlenlp.transformers import AutoTokenizer,AutoModelForConditionalGeneration,LinearDecayWithWarmupfrom paddlenlp.utils.log import loggerfrom paddlenlp.data import DataCollatorForSeq2Seqimport paddlefrom paddle.io import BatchSampler, DistributedBatchSampler, DataLoaderfrom paddle import nn# 开始字符bos_token_id = 1# 结束字符eos_token_id = 2# 补全字符pad_token_id = 0# 训练模型的保存路径save_dir = 'checkpoints2'# 最高分数的记录best_score = 0# 影像描述的最大长度max_source_length = 160# 诊断报告的最大长度max_target_length = 90# 诊断报告的最小长度min_target_length = 0# 训练轮次num_epochs = 5# 训练中,每个log_steps打印一次日志log_steps = 50# 训练中,每隔eval_steps进行一次模型评估eval_steps = 300# 设置batch_sizetrain_batch_size = 16 dev_batch_size = 64test_batch_size = 64

[2023-04-27 22:25:54,586] [ WARNING] - Detected that datasets module was imported before paddlenlp. This may cause PaddleNLP datasets to be unavalible in intranet. Please import paddlenlp before datasets module to avoid download issues

# 定义句子还原函数def array2str(arr):

out = ''

for i in range(len(arr)): # 遇到结束标记就停止

if arr[i]==eos_token_id or arr[i]==pad_token_id: break

# 遇到开始标记就继续

if arr[i]==bos_token_id: continue

out += str(int(arr[i])) + ' '

if len(out.strip())==0:

out = '0'

return out.strip()

array2str([1,10,11,12,13,2,0,0,0,0,0,0,0])'10 11 12 13'

# 定义损失函数def CE(output, target):

'''

Output: (B,L,C)。未经过softmax的logits

Target: (B,L)

'''

# print(target)

# reshape 不同

output = output.reshape((-1, output.shape[-1])) # (*,C)

target = target.reshape((-1,))#.long() # (*)

return nn.CrossEntropyLoss()(output, target) #默认size_average=True,会把B*L所有词loss平均# 加载模型和tokenizer,batchify_fmodel_name = 'IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese'tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForConditionalGeneration.from_pretrained(model_name, # 如果有预训练,换成预训练保存的文件夹名称 ‘pre’

vocab_size=1500, # 因为词表和原来不同,所以需要修改模型的token数量

bos_token_id = bos_token_id,# 重新定义

eos_token_id = eos_token_id,# 重新定义

decoder_start_token_id = eos_token_id,# 重新定义

forced_eos_token_id = eos_token_id,# 重新定义

pad_token_id = pad_token_id)

batchify_fn = DataCollatorForSeq2Seq(tokenizer=tokenizer, model=model)[2023-04-27 22:25:57,444] [ INFO] - Downloading tokenizer_config.json from https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/tokenizer_config.json 100%|██████████| 2.00/2.00 [00:00<00:00, 1.38kB/s]

We use pattern recognition to recognize the Tokenizer class.

[2023-04-27 22:25:57,570] [ INFO] - We are using <class 'paddlenlp.transformers.pegasus.tokenizer.PegasusChineseTokenizer'> to load 'IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese'. [2023-04-27 22:25:57,573] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/vocab.txt and saved to /home/aistudio/.paddlenlp/models/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese [2023-04-27 22:25:57,576] [ INFO] - Downloading vocab.txt from https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/vocab.txt 100%|██████████| 365k/365k [00:00<00:00, 1.02MB/s] [2023-04-27 22:25:58,268] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/added_tokens.json and saved to /home/aistudio/.paddlenlp/models/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese [2023-04-27 22:25:58,271] [ INFO] - Downloading added_tokens.json from https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/added_tokens.json 100%|██████████| 2.00/2.00 [00:00<00:00, 1.11kB/s] [2023-04-27 22:25:58,371] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/special_tokens_map.json and saved to /home/aistudio/.paddlenlp/models/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese [2023-04-27 22:25:58,374] [ INFO] - Downloading special_tokens_map.json from https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/special_tokens_map.json 100%|██████████| 65.0/65.0 [00:00<00:00, 60.4kB/s] [2023-04-27 22:25:58,514] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/tokenizer_config.json [2023-04-27 22:25:58,572] [ INFO] - Downloading model_config.json from https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/model_config.json 100%|██████████| 731/731 [00:00<00:00, 455kB/s] [2023-04-27 22:25:58,738] [ INFO] - We are using <class 'paddlenlp.transformers.pegasus.modeling.PegasusForConditionalGeneration'> to load 'IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese'. [2023-04-27 22:25:58,740] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/model_state.pdparams and saved to /home/aistudio/.paddlenlp/models/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese [2023-04-27 22:25:58,742] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/models/community/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/model_state.pdparams 100%|██████████| 675M/675M [00:52<00:00, 13.3MB/s] [2023-04-27 22:26:51,878] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/IDEA-CCNL/Randeng-Pegasus-238M-Summary-Chinese/model_config.json W0427 22:26:51.884132 188 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 11.2 W0427 22:26:51.888605 188 gpu_resources.cc:91] device: 0, cuDNN Version: 8.2.

训练集总共2万条,按9:1的比例,直接取训练集中后2000条作为验证集,提交几次后发现验证集上的效果和线上效果基本一致。

test = pd.read_csv('data/data201684/preliminary_a_test.csv',header=None)

test[2] = '0 0'test[[1,2]].to_csv('test.csv',header = None,index=False)

train = pd.read_csv('data/data201684/train.csv',header=None)

train[[1,2]].head(18000).to_csv('train.csv',header = None,index=False)

train[[1,2]].tail(2000).to_csv('valid.csv',header = None,index=False)

train_dataset = load_dataset("csv", data_files='train.csv',names=['yingxiang', 'zhenduan'], split="train")

dev_dataset = load_dataset("csv", data_files='valid.csv',names=['yingxiang', 'zhenduan'], split="train")

test_dataset = load_dataset("csv", data_files='test.csv',names=['yingxiang', 'zhenduan'], split="train")Using custom data configuration default-17b80d32585c117e

Downloading and preparing dataset csv/default to /home/aistudio/.cache/huggingface/datasets/csv/default-17b80d32585c117e/0.0.0/6b34fb8fcf56f7c8ba51dc895bfa2bfbe43546f190a60fcf74bb5e8afdcc2317...

Downloading data files: 0%| | 0/1 [00:00<?, ?it/s]

Extracting data files: 0%| | 0/1 [00:00<?, ?it/s]

Generating train split: 0 examples [00:00, ? examples/s]

Dataset csv downloaded and prepared to /home/aistudio/.cache/huggingface/datasets/csv/default-17b80d32585c117e/0.0.0/6b34fb8fcf56f7c8ba51dc895bfa2bfbe43546f190a60fcf74bb5e8afdcc2317. Subsequent calls will reuse this data.

Using custom data configuration default-762584762b1087a2

Downloading and preparing dataset csv/default to /home/aistudio/.cache/huggingface/datasets/csv/default-762584762b1087a2/0.0.0/6b34fb8fcf56f7c8ba51dc895bfa2bfbe43546f190a60fcf74bb5e8afdcc2317...

Downloading data files: 0%| | 0/1 [00:00<?, ?it/s]

Extracting data files: 0%| | 0/1 [00:00<?, ?it/s]

Generating train split: 0 examples [00:00, ? examples/s]

Dataset csv downloaded and prepared to /home/aistudio/.cache/huggingface/datasets/csv/default-762584762b1087a2/0.0.0/6b34fb8fcf56f7c8ba51dc895bfa2bfbe43546f190a60fcf74bb5e8afdcc2317. Subsequent calls will reuse this data.

Using custom data configuration default-bd109b044935a003

Downloading and preparing dataset csv/default to /home/aistudio/.cache/huggingface/datasets/csv/default-bd109b044935a003/0.0.0/6b34fb8fcf56f7c8ba51dc895bfa2bfbe43546f190a60fcf74bb5e8afdcc2317...

Downloading data files: 0%| | 0/1 [00:00<?, ?it/s]

Extracting data files: 0%| | 0/1 [00:00<?, ?it/s]

Generating train split: 0 examples [00:00, ? examples/s]

Dataset csv downloaded and prepared to /home/aistudio/.cache/huggingface/datasets/csv/default-bd109b044935a003/0.0.0/6b34fb8fcf56f7c8ba51dc895bfa2bfbe43546f190a60fcf74bb5e8afdcc2317. Subsequent calls will reuse this data.

# 处理数据的函数,因为本次直接是脱敏数据成数字,所以就不需要tokenizer再做处理了,直接拼接开始和结束符号作为输入def convert_example(example, text_column, summary_column):

"""

构造模型的输入

"""

inputs = example[text_column].split()

inputs = [bos_token_id]+[int(i) for i in inputs]

inputs.append(eos_token_id)

targets = example[summary_column].split()

targets = [bos_token_id]+[int(i) for i in targets]

targets.append(eos_token_id)

model_inputs = {}

model_inputs["input_ids"] = inputs

model_inputs["attention_mask"] = [1]*len(inputs)

model_inputs["labels"] = targets return model_inputs# 原始字段需要移除remove_columns = ['yingxiang', 'zhenduan']# 定义转换器trans_func = partial(convert_example,

text_column='yingxiang',

summary_column='zhenduan')

# train_dataset和dev_dataset分别转换train_dataset = train_dataset.map(trans_func,batched=False,# 对每条数据逐个处理

load_from_cache_file=True,remove_columns=remove_columns)

dev_dataset = dev_dataset.map(trans_func,batched=False,

load_from_cache_file=True,remove_columns=remove_columns)

test_dataset = test_dataset.map(trans_func,batched=False,

load_from_cache_file=True,remove_columns=remove_columns)0%| | 0/18000 [00:00<?, ?ex/s]

0%| | 0/2000 [00:00<?, ?ex/s]

0%| | 0/3000 [00:00<?, ?ex/s]

# 分布式批采样器,用于多卡分布式训练train_batch_sampler = DistributedBatchSampler(train_dataset, batch_size=train_batch_size, shuffle=True)# 构造训练训练集Dataloadertrain_data_loader = DataLoader(dataset=train_dataset,batch_sampler=train_batch_sampler,

num_workers=0,collate_fn=batchify_fn,return_list=True)

dev_batch_sampler = BatchSampler(dev_dataset,batch_size=dev_batch_size,shuffle=False)

dev_data_loader = DataLoader(dataset=dev_dataset,batch_sampler=dev_batch_sampler,

num_workers=0,collate_fn=batchify_fn,return_list=True)

test_batch_sampler = BatchSampler(test_dataset,batch_size=test_batch_size,shuffle=False)

test_data_loader = DataLoader(dataset=test_dataset,batch_sampler=test_batch_sampler,

num_workers=0,collate_fn=batchify_fn,return_list=True)for idx, example in enumerate(dev_data_loader): print(example) break{'input_ids': Tensor(shape=[64, 134], dtype=int64, place=Place(gpu:0), stop_gradient=True,

[[1 , 185, 185, ..., 0 , 0 , 0 ],

[1 , 14 , 281, ..., 0 , 0 , 0 ],

[1 , 34 , 12 , ..., 0 , 0 , 0 ],

...,

[1 , 22 , 12 , ..., 0 , 0 , 0 ],

[1 , 12 , 62 , ..., 0 , 0 , 0 ],

[1 , 22 , 12 , ..., 0 , 0 , 0 ]]), 'attention_mask': Tensor(shape=[64, 134], dtype=int64, place=Place(gpu:0), stop_gradient=True,

[[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0],

...,

[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0]]), 'labels': Tensor(shape=[64, 69], dtype=int64, place=Place(gpu:0), stop_gradient=True,

[[ 1 , 22 , 12 , ..., -100, -100, -100],

[ 1 , 34 , 12 , ..., -100, -100, -100],

[ 1 , 34 , 12 , ..., 282, 10 , 2 ],

...,

[ 1 , 14 , 30 , ..., -100, -100, -100],

[ 1 , 66 , 19 , ..., -100, -100, -100],

[ 1 , 75 , 80 , ..., -100, -100, -100]]), 'decoder_input_ids': Tensor(shape=[64, 69], dtype=int64, place=Place(gpu:0), stop_gradient=True,

[[2 , 1 , 22 , ..., 0 , 0 , 0 ],

[2 , 1 , 34 , ..., 0 , 0 , 0 ],

[2 , 1 , 34 , ..., 90 , 282, 10 ],

...,

[2 , 1 , 14 , ..., 0 , 0 , 0 ],

[2 , 1 , 66 , ..., 0 , 0 , 0 ],

[2 , 1 , 75 , ..., 0 , 0 , 0 ]])}定义优化器

# 学习率预热比例warmup_proportion = 0.02# 学习率learning_rate = 1e-4# 训练总步数num_training_steps = len(train_data_loader) * num_epochs# AdamW优化器参数epsilonadam_epsilon = 1e-6# AdamW优化器参数weight_decayweight_decay=0.01# 可视化log_writer = LogWriter('visualdl_log_dir')

lr_scheduler = LinearDecayWithWarmup(learning_rate, num_training_steps, warmup_proportion)# LayerNorm参数不参与weight_decaydecay_params = [

p.name for n, p in model.named_parameters() if not any(nd in n for nd in ["bias", "norm"])

]# 优化器AdamWoptimizer = paddle.optimizer.AdamW(

learning_rate=lr_scheduler,

beta1=0.9,

beta2=0.999,

epsilon=adam_epsilon,

parameters=model.parameters(),

weight_decay=weight_decay,

apply_decay_param_fun=lambda x: x in decay_params)定义模型评估函数

# 模型评估函数@paddle.no_grad()def evaluate(model, data_loader, tokenizer, min_target_length,max_target_length):

model.eval()

model = model._layers if isinstance(model, paddle.DataParallel) else model

res, gts = [], {}

tot = 0

for batch in tqdm(data_loader):

targets = batch['labels']

pred = model.generate(input_ids=batch['input_ids'],

attention_mask=batch['attention_mask'],

min_length=min_target_length,

max_length=max_target_length,

use_cache=True,

length_penalty=0.7,

decode_strategy='beam_search',

num_beams=5,

early_stopping=True)[0]

pred = pred.cpu().numpy() #print(pred.shape)

for i in range(pred.shape[0]):

res.append({'image_id':tot, 'caption': [array2str(pred[i])]})

gts[tot] = [array2str(targets[i])]

tot += 1

CiderD_scorer = CiderD(df='corpus', sigma=15)

cider_score, cider_scores = CiderD_scorer.compute_score(gts, res) print('cid',cider_score) return cider_score# 定义FGM对抗训练

class FGM:

def __init__(self, model, eps=1.):

self.model = (model.module if hasattr(model, "module") else model)

self.eps = eps

self.backup = {} # only attack embedding

def attack(self, emb_name='embedding'):

for name, param in self.model.named_parameters(): if param.stop_gradient and emb_name in name:

self.backup[name] = param.data.clone()

norm = paddle.norm(param.grad) if norm and not paddle.isnan(norm):

r_at = self.eps * param.grad / norm

param.data.add_(r_at) def restore(self, emb_name='embedding'):

for name, para in self.model.named_parameters(): if para.stop_gradient and emb_name in name: assert name in self.backup

para.data = self.backup[name]

self.backup = {}

# 初始化fgm = FGM(model)# 定义EMA,本次比赛尝试没有效果

# class ExponentialMovingAverage():# def __init__(self, model, decay, thres_steps=True):# self._model = model# self._decay = decay# self._thres_steps = thres_steps# self._shadow = {}# self._backup = {}# def register(self):# self._update_step = 0# for name, param in self._model.named_parameters():# if param.stop_gradient is False: # 只记录可训练参数。bn层的均值、方差的stop_gradient默认是True,所以不会记录bn层的均值、方差。# self._shadow[name] = param.numpy().copy()# def update(self):# for name, param in self._model.named_parameters():# if param.stop_gradient is False:# assert name in self._shadow# new_val = np.array(param.numpy().copy())# old_val = np.array(self._shadow[name])# decay = min(self._decay, (1 + self._update_step) / (10 + self._update_step)) if self._thres_steps else self._decay# new_average = decay * old_val + (1 - decay) * new_val# self._shadow[name] = new_average# self._update_step += 1# return decay# def apply(self):# for name, param in self._model.named_parameters():# if param.stop_gradient is False:# assert name in self._shadow# self._backup[name] = np.array(param.numpy().copy())# param.set_value(np.array(self._shadow[name]))# def restore(self):# for name, param in self._model.named_parameters():# if param.stop_gradient is False:# assert name in self._backup# param.set_value(self._backup[name])# self._backup = {}# ema = ExponentialMovingAverage(model, 0.9998)# ema.register()# 如果有训练好的模型参数,可以直接加载# state_dict = paddle.load('checkpoints2/model_state.pdparams')# model.set_dict(state_dict)global_step = 0tic_train0 = time.time()

tic_train = time.time()for epoch in range(num_epochs): for step, batch in enumerate(train_data_loader):

global_step += 1

# 模型前向训练,计算loss

lm_logits, _, loss = model(**batch)

loss.backward()

fgm.attack() # 在embedding上添加对抗扰动

lm_logits, _, loss = model(**batch)

loss.backward()

fgm.restore() # 恢复embedding参数

optimizer.step()

lr_scheduler.step()

optimizer.clear_grad() # ema.update()

if global_step % log_steps == 0:

logger.info('global step {}/{}, epoch: {}, loss: {}, lr: {}, speed: {} s/step, already: {}min, remain: {}min'.format(

global_step,

num_training_steps,

epoch, round(loss.item(),10), # 目前损失

round(optimizer.get_lr(),10), # 目前学习率

round((time.time() - tic_train)/log_steps,3), # 每步耗时

round((time.time() - tic_train0)/60,3), # 总耗时

round((num_training_steps-global_step)/log_steps*(time.time() - tic_train)/60),3)) # 预计耗时

tic_train = time.time() if global_step % eval_steps== 0 and global_step >= 0:

tic_eval = time.time()

score = evaluate(model, dev_data_loader, tokenizer,min_target_length, max_target_length)

logger.info("eval done total : %s s" % (time.time() - tic_eval)) if best_score < score:

best_score = score if paddle.distributed.get_rank() == 0: if not os.path.exists(save_dir):

os.makedirs(save_dir) # Need better way to get inner model of DataParallel

model_to_save = model._layers if isinstance(

model, paddle.DataParallel) else model

model_to_save.save_pretrained(save_dir)

tokenizer.save_pretrained(save_dir)# 模型评估函数@paddle.no_grad()def evaluate(model, data_loader, tokenizer, min_target_length,max_target_length):

model.eval()

model = model._layers if isinstance(model, paddle.DataParallel) else model

res, gts = [], {}

tot = 0

for batch in tqdm(data_loader):

targets = batch['labels']

pred = model.generate(input_ids=batch['input_ids'],

attention_mask=batch['attention_mask'],

min_length=min_target_length,

max_length=max_target_length,

use_cache=True,

length_penalty=0.7,

decode_strategy='beam_search',

num_beams=5,

early_stopping=True)[0]

pred = pred.cpu().numpy() for i in range(pred.shape[0]):

res.append({'image_id':tot, 'caption': [array2str(pred[i])]})

gts[tot] = [array2str(targets[i])]

tot += 1

CiderD_scorer = CiderD(df='corpus', sigma=15)

cider_score, cider_scores = CiderD_scorer.compute_score(gts, res) print('cid',cider_score) return cider_score# ema.apply()evaluate(model, dev_data_loader, tokenizer,min_target_length, max_target_length)# state_dict = model.state_dict()# paddle.save(state_dict, "paddle_dy_ema.pdparams")

# state_dict = paddle.load('checkpoints/model_state.pdparams')# model.set_dict(state_dict)# 模型评估函数@paddle.no_grad()def pre(model, data_loader, tokenizer, min_target_length,max_target_length):

model.eval()

all_preds = []

model = model._layers if isinstance(model, paddle.DataParallel) else model for batch in tqdm(data_loader, total=len(data_loader), desc="Eval step"):

labels = batch.pop('labels').numpy() # 模型生成

preds = model.generate(input_ids=batch['input_ids'],

attention_mask=batch['attention_mask'],

min_length=min_target_length,

max_length=max_target_length,

use_cache=True,

length_penalty=0.7,

decode_strategy='beam_search',

num_beams=5)[0] # tokenizer将id转为string

for i in range(len(preds)):

all_preds.append(array2str(preds[i])) # break

# print(all_preds, all_labels)

# CiderD_scorer = CiderD(df='corpus', sigma=15)

# cider_score = CiderD_scorer.compute_score(all_preds, all_labels)

# model.train()

return all_preds#rouge1, rouge2, rougel, bleu4pred = pre(model, test_data_loader, tokenizer, min_target_length, max_target_length)

df = pd.DataFrame(pred)

df[1] = df.index

df.columns = ['prediction','report_ID']

df[['report_ID','prediction']].to_csv('pre.csv',index=False,header=None)# 输出预测文件,下载提交即可<br/>

以上就是2023全球人工智能技术创新大赛-影像学NLP赛题baseline的详细内容,更多请关注php中文网其它相关文章!

每个人都需要一台速度更快、更稳定的 PC。随着时间的推移,垃圾文件、旧注册表数据和不必要的后台进程会占用资源并降低性能。幸运的是,许多工具可以让 Windows 保持平稳运行。

Copyright 2014-2025 https://www.php.cn/ All Rights Reserved | php.cn | 湘ICP备2023035733号