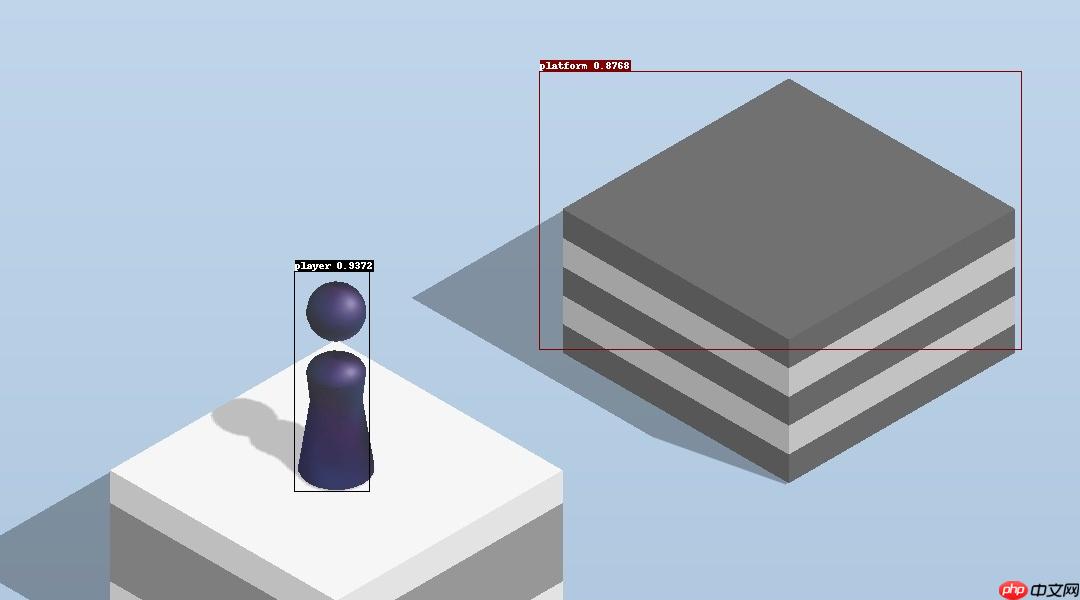

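This article describes how to build a deep-learning-based physical bot for the WeChat mini-game Jump Jump (跳一跳). The steps are: set up PaddleDetection, build and annotate a custom dataset of 199 images, and convert the annotations to COCO format; then train a PPYOLO_Tiny model, validate it, export it, and deploy it. The PC converts the distance between the detected coordinates into a press duration and sends commands to a 51 microcontroller through host-side code, and the microcontroller acts on those commands. All the relevant code is included.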


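For my microcontroller principles course I built a not-very-useful physical bot for Jump Jump using OpenCV and 51 assembly. It seemed that the detection step could be replaced with deep-learning object detection, so I gave it a try.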

Result image:

Result animation:

    # Install PaddleDetection
    !git clone https://gitee.com/paddlepaddle/PaddleDetection.git work/PaddleDetection

    # Install the dependencies
    !pip install --upgrade -r work/PaddleDetection/requirements.txt -i https://mirror.baidu.com/pypi/simple

    # Unpack the dataset and sort it into image, label and annotation folders
    !unzip -q 'data/data109105/JumpData V1.zip' -d data/
    !mkdir data/JumpData
    !mkdir data/JumpData/Image
    !mkdir data/JumpData/Label
    !mkdir data/JumpData/Anno
    !mv data/DatasetId_230079_1631965091/*.json data/JumpData/Label
    !mv data/DatasetId_230079_1631965091/*.jpg data/JumpData/Image
    !rm -rf data/DatasetId_230079_1631965091
    !rm -rf data/JumpData/Label/screen2.json
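After unpacking, it may help to confirm that every image has a label file with the same base name, since the conversion step opens one `.json` per image. The sketch below is only an illustration and not part of the original project: the helper name `find_unlabeled` is hypothetical, and the demo runs on a throwaway temporary directory rather than on `data/JumpData`.

```python
import os
import tempfile


def find_unlabeled(image_dir, label_dir):
    """Return the image file names that have no same-named .json label file."""
    labels = {os.path.splitext(name)[0]
              for name in os.listdir(label_dir) if name.endswith('.json')}
    return sorted(name for name in os.listdir(image_dir)
                  if os.path.splitext(name)[0] not in labels)


# Demo on a temporary directory (hypothetical file names)
with tempfile.TemporaryDirectory() as root:
    image_dir = os.path.join(root, 'Image')
    label_dir = os.path.join(root, 'Label')
    os.makedirs(image_dir)
    os.makedirs(label_dir)
    for name in ('a.jpg', 'b.jpg'):
        open(os.path.join(image_dir, name), 'w').close()
    open(os.path.join(label_dir, 'a.json'), 'w').close()
    missing = find_unlabeled(image_dir, label_dir)
    print(missing)  # ['b.jpg']
```

On the real dataset the call would presumably be `find_unlabeled('data/JumpData/Image', 'data/JumpData/Label')`.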

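Convert the labels into a COCO-format annotation file. (I got this step wrong at first: after a long training run the accuracy would not improve, and only later did I find that my annotation file was malformed.)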
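As a reminder of what the conversion has to produce: COCO stores each box as `[x, y, width, height]` measured from the top-left corner, whereas the label files here store corner coordinates (`x1`, `y1`, `x2`, `y2`). A minimal sketch of that per-box arithmetic follows; the helper name `to_coco_bbox` and the sample values are hypothetical.

```python
def to_coco_bbox(label):
    """Convert a corner-format label (x1, y1, x2, y2) to a COCO [x, y, w, h] box plus its area."""
    w = label['x2'] - label['x1']
    h = label['y2'] - label['y1']
    return [label['x1'], label['y1'], w, h], w * h


# Hypothetical label shaped like the entries under data['labels']
sample = {'name': 'player', 'x1': 100, 'y1': 200, 'x2': 160, 'y2': 320}
bbox, area = to_coco_bbox(sample)
print(bbox, area)  # [100, 200, 60, 120] 7200
```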

    # Build the COCO-format annotation files
    import os
    import json

    classlist = [{'supercategory': 'none', 'id': 1, 'name': 'player'},
                 {'supercategory': 'none', 'id': 2, 'name': 'platform'}]


    def build_coco(imagenames):
        """Build a COCO-style annotation dict for the given image file names."""
        imagelist = []
        labellist = []
        idxcount = 0  # running annotation id; the first annotation gets id 1
        for imgcountidx, imagename in enumerate(imagenames):
            # Every image is recorded as 1080 x 600 (width x height)
            imagelist.append({'file_name': imagename, 'height': 600, 'width': 1080, 'id': imgcountidx})
            jsonname = os.path.splitext(imagename)[0] + '.json'
            with open(os.path.join('data/JumpData/Label', jsonname)) as f:
                data = json.load(f)
            for label in data['labels']:
                # Corner coordinates -> COCO [x, y, width, height]
                x1 = label['x1']
                y1 = label['y1']
                w = label['x2'] - label['x1']
                h = label['y2'] - label['y1']
                area = w * h
                if label['name'] == 'platform':
                    classidx = 2
                else:
                    classidx = 1
                idxcount += 1
                labellist.append({'area': area,
                                  'iscrowd': 0,
                                  'bbox': [x1, y1, w, h],
                                  'category_id': classidx,
                                  'ignore': 0,
                                  'segmentation': [],
                                  'image_id': imgcountidx,
                                  'id': idxcount})
        return {'images': imagelist,
                'type': 'instances',
                'annotations': labellist,
                'categories': classlist}


    # List the directory once so that the train/val split is consistent
    imagenames = os.listdir('data/JumpData/Image')
    JumpData_train = build_coco(imagenames[0:179])    # 179 training images
    JumpData_val = build_coco(imagenames[179:199])    # 20 validation images

    with open('data/JumpData/Anno/JumpData_train.json', 'w') as f:
        json.dump(JumpData_train, f, indent=4)
    with open('data/JumpData/Anno/JumpData_val.json', 'w') as f:
        json.dump(JumpData_val, f, indent=4)

Training uses PPYOLO_Tiny; the configuration files are in the work/Config/Yml folder.
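Before starting a training run, it can be worth sanity-checking the generated annotation files, since a malformed file only shows up later as accuracy that refuses to improve. The sketch below is one possible check and not part of the original project; the function name `check_coco` and the small in-memory example are hypothetical.

```python
def check_coco(coco):
    """Return a list of human-readable problems found in a COCO-style dict."""
    problems = []
    image_ids = {img['id'] for img in coco['images']}
    category_ids = {cat['id'] for cat in coco['categories']}
    seen = set()
    for ann in coco['annotations']:
        if ann['id'] in seen:
            problems.append(f"duplicate annotation id {ann['id']}")
        seen.add(ann['id'])
        if ann['image_id'] not in image_ids:
            problems.append(f"annotation {ann['id']}: unknown image_id {ann['image_id']}")
        if ann['category_id'] not in category_ids:
            problems.append(f"annotation {ann['id']}: unknown category_id {ann['category_id']}")
        _, _, w, h = ann['bbox']
        if w <= 0 or h <= 0:
            problems.append(f"annotation {ann['id']}: non-positive box size {w}x{h}")
    return problems


# Hypothetical example: the second annotation is deliberately broken
example = {
    'images': [{'file_name': 'a.jpg', 'height': 600, 'width': 1080, 'id': 0}],
    'categories': [{'supercategory': 'none', 'id': 1, 'name': 'player'},
                   {'supercategory': 'none', 'id': 2, 'name': 'platform'}],
    'annotations': [
        {'id': 1, 'image_id': 0, 'category_id': 1, 'bbox': [100, 200, 60, 120]},
        {'id': 2, 'image_id': 5, 'category_id': 3, 'bbox': [10, 10, 0, 40]},
    ],
}
problems = check_coco(example)
for problem in problems:
    print(problem)
```

To check the real files, load `data/JumpData/Anno/JumpData_train.json` and `JumpData_val.json` with `json.load` and pass each resulting dict to the function.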

    # Train the model
    # (%env is used because `!export` would only affect its own subshell)
    %env CUDA_VISIBLE_DEVICES=0
    !python work/PaddleDetection/tools/train.py \
        -c work/Config/Yml/PPYOLO_TINY_JUMP.yml \
        --eval

    # Run prediction with the trained weights
    !python work/PaddleDetection/tools/infer.py \
        -c work/Config/Yml/PPYOLO_TINY_JUMP.yml \
        -o weights=work/output/PPYOLO_TINY_JUMP/best_model.pdparams \
        --infer_img=work/Config/screen.png \
        --draw_threshold=0.5 \
        --output_dir=work/imgresult

    # Export the model
    !python work/PaddleDetection/tools/export_model.py \
        -c work/Config/Yml/PPYOLO_TINY_JUMP.yml \
        -o weights=work/output/PPYOLO_TINY_JUMP/best_model.pdparams \
        --output_dir=work/inference_model

    # Run prediction with the exported model
    !python work/PaddleDetection/deploy/python/infer.py \
        --model_dir=work/inference_model/PPYOLO_TINY_JUMP \
        --image_file=work/Config/screen.png \
        --output_dir=work/inferenceresult

Host-side code that runs on the PC:

    import os
    import cv2
    import time
    import serial
    import paddle
    # Predict is a local helper module of this project; it is not listed in this article
    from Predict import PredictConfig, Detector, predict_image


    def getscreen():
        # Remove the previous screenshot from the phone and from the PC
        os.system("adb shell rm /sdcard/screen.png")
        # Raw string: "\U" in a normal string literal is an invalid escape in Python 3
        os.system(r"del C:\Users\Blang\Desktop\screen.png")
        # Take a new screenshot and pull it to the PC
        os.system("adb shell screencap -p /sdcard/screen.png")
        os.system("adb pull /sdcard/screen.png C:/Users/Blang/Desktop")
        img = cv2.imread('C:/Users/Blang/Desktop/screen.png')
        # Keep only the 600-row band used for detection
        img = img[850:1450, :]
        return img


    def getposition(image_file):
        paddle.enable_static()
        threshold = 0.5
        model_dir = 'inference_model/PPYOLO_TINY_JUMP'
        pred_config = PredictConfig(model_dir)
        detector = Detector(pred_config, model_dir)
        results = predict_image(detector, image_file, threshold)
        return results


    def calculate(results):
        player_position = []
        platform_position = []
        for result in results:
            classid = result[0]
            xmin = result[2]
            ymin = result[3]
            xmax = result[4]
            ymax = result[5]
            w = xmax - xmin
            h = ymax - ymin
            # The bottom-centre of each box is used as its reference point
            if classid == 0:  # class 0: player
                player_x = xmin + w / 2
                player_y = ymax
                player_position.append([player_x, player_y])
            else:             # otherwise: platform
                platform_x = xmin + w / 2
                platform_y = ymax
                platform_position.append([platform_x, platform_y])
        for player in player_position:
            for platform in platform_position:
                # Skip platforms whose bottom edge is lower on screen than the player's
                if player[1] < platform[1]:
                    continue
                else:
                    player_x = player[0]
                    player_y = player[1]
                    platform_x = platform[0]
                    platform_y = platform[1]
                    # Euclidean distance in pixels
                    distance = ((platform_x - player_x)**2 + (platform_y - player_y)**2)**0.5
                    presstime = distance * 1.5 + 200   # press time in milliseconds
                    presstime = presstime * 0.001      # convert to seconds
        return presstime


    def sendcommand(presstime):
        ser = serial.Serial("COM5", 2400, timeout=5)
        ser.write(chr(0x32).encode("utf-8"))  # '2': start pressing
        time.sleep(presstime)                  # hold for the computed duration
        ser.write(chr(0x31).encode("utf-8"))  # '1': release
        ser.close()
        time.sleep(1)                          # pause before the next screenshot


    while True:
        img = getscreen()
        results = getposition(img)
        presstime = calculate(results)
        sendcommand(presstime)

Assembly code for the 51 microcontroller:

            ORG     0000H

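            LJMP    MAIN
            ORG     000BH               ; Timer 0 overflow interrupt vector
            LJMP    Timer0Interrupt
            ORG     0023H               ; serial port interrupt vector
            LJMP    UARTInterrupt
            ORG     0100H
    MAIN:
            MOV     SP, #60H            ; set the stack pointer before any LCALL so return addresses stay valid
            LCALL   InitAll
            LCALL   InitTimer0
            LCALL   InitUART
            LCALL   PWM
            LJMP    MAIN
    /***********************************/
    InitAll:
            MOV     R7, #50D            ; number of PWM periods generated per call to PWM
            MOV     TMOD, #22H          ; Timer 0 and Timer 1 both in mode 2 (8-bit auto-reload)
            RET
    /***********************************/
    InitTimer0:
            MOV     TH0, #0A4H          ; reload value: one tick every 256 - 0A4H = 92 machine cycles
            MOV     TL0, #0A4H
            SETB    EA                  ; enable interrupts globally
            SETB    ET0                 ; enable the Timer 0 interrupt
            SETB    TR0                 ; start Timer 0
            SETB    P3.5                ; both PWM outputs start high
            SETB    P3.6
            RET
    PWM:
            CLR     C                   ; SUBB also subtracts the carry flag
            MOV     A, #200D            ; one PWM period is 200 timer ticks
            SUBB    A, R1               ; R1 = high time in ticks
            MOV     R2, A               ; R2 = low time in ticks
            MOV     A, R1
            MOV     R0, A
    DELAY:
            CJNE    R0, #00H, $         ; wait for the high time (R0 is decremented by the Timer 0 ISR)
            CLR     P3.5
            CLR     P3.6
            MOV     A, R2
            MOV     R0, A
            CJNE    R0, #00H, $         ; wait for the low time
            SETB    P3.5
            SETB    P3.6
            MOV     A, R1
            MOV     R0, A
            DJNZ    R7, DELAY           ; repeat for R7 periods
            RET
    Timer0Interrupt:
            DEC     R0                  ; one tick elapsed
            RETI
    /***********************************/
    InitUART:
            MOV     SCON, #50H          ; serial mode 1 (8-bit UART), receive enabled
            MOV     TH1, #0F3H          ; Timer 1 reload: about 2400 baud with a 12 MHz crystal and SMOD = 0
            MOV     TL1, TH1
            MOV     PCON, #00H          ; SMOD = 0
            SETB    EA
            SETB    ES                  ; enable the serial interrupt
            SETB    TR1                 ; start Timer 1 (baud-rate generator)
            RET
    UARTInterrupt:
            CLR     ES
            PUSH    ACC
            JB      RI, Is_Receive
            SJMP    GOBACK              ; not a receive interrupt: restore state and return
    Is_Receive:
            CLR     RI
            MOV     A, SBUF
            CJNE    A, #31H, NEXT_POSITION
            MOV     R1, #8D             ; command '1': short high time (release)
    NEXT_POSITION:
            CJNE    A, #32H, GOBACK
            MOV     R1, #20D            ; command '2': long high time (press)
    GOBACK:
            SETB    ES
            POP     ACC
            RETI
    /***********************************/
            END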
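The distance-to-press-time mapping used by `calculate` in the host script can also be looked at in isolation. The sketch below reuses the script's constants (1.5 ms per pixel plus a 200 ms offset); the function name `press_time_seconds` and the sample coordinates are hypothetical, and the constants would presumably need re-tuning for a different screen resolution or actuator.

```python
import math


def press_time_seconds(player, platform, ms_per_pixel=1.5, offset_ms=200):
    """Map the pixel distance between two (x, y) points to a press duration in seconds."""
    distance = math.hypot(platform[0] - player[0], platform[1] - player[1])
    return (distance * ms_per_pixel + offset_ms) * 0.001


# Hypothetical reference points: the distance is 500 px, so the press lasts about 0.95 s
duration = press_time_seconds((300, 500), (600, 100))
print(round(duration, 3))
```

Keeping the two constants as parameters makes it easier to calibrate the linear fit by trial jumps without touching the detection code.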
