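This project builds on ResNet50: the standard convolution in the conv2 layer of each BottleneckBlock is replaced with a deformable convolution (DCN), giving a ResNet50-DCN model that classifies iFLYTEK speech time-series spectrogram images into 24 classes. The 2143 labelled samples are split into 1714 training and 429 validation samples. After 90 epochs the best validation accuracy was about 79%; the model was not tuned further, so there is room for improvement. The project also covers data processing, model training, and inference.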

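This project takes resnet50 and replaces the standard convolution in the conv2 layer of its BottleneckBlock module with a DCN (Deformable ConvNets V1) deformable convolution, with the aim of improving the model's generalization. The project only builds the resnet50-dcn model and gets it running end to end. The best validation accuracy is 79%; no further tuning was done, so there is room for improvement.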

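The dataset consists of iFLYTEK speech time-series spectrogram images (anonymized) in 24 classes, with a little over 2000 training samples and 1020 test images. Please bear with any imprecise wording, and corrections are welcome.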

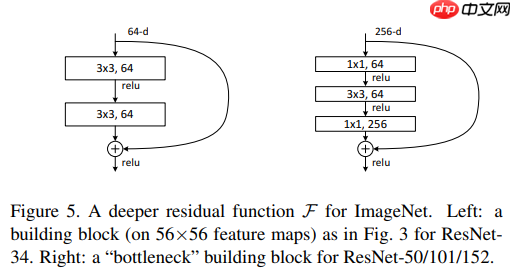

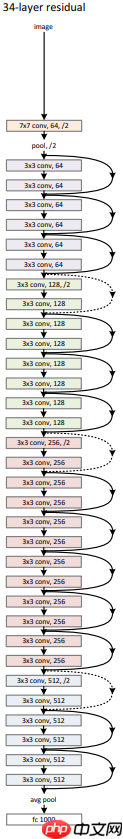

resnet50 is obtained from resnet34 by replacing its basic residual block (left in the figure above) with the bottleneck block (right).

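Thanks to the residual idea, a deep-learning vision model can stay true to its input (through the identity shortcuts) even when it is very deep, and it remains easy to train: it discards the dross, keeps the essence, and ends up stronger. The implementation and the original paper can both be found here (PaddlePaddle API).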

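Note: softmax is applied inside the cross-entropy loss computation, so the resnet50 network itself has no softmax layer. Its output is therefore raw scores (logits), not probabilities between 0 and 1.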
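To make the note concrete, here is a minimal sketch of turning raw logits into probabilities. The `softmax` helper and the example logits are illustrative assumptions and are not part of the project code; the class with the largest logit is also the class with the largest probability, so `argmax` can be applied to either.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for 3 classes (the real model outputs 24 values per image).
logits = [2.0, -1.0, 0.5]
probs = softmax(logits)
predicted = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted)
```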

Background

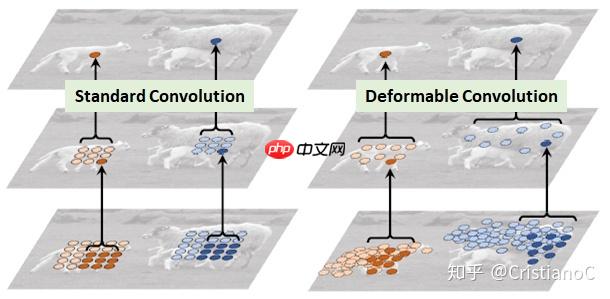

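In computer vision, the unknown geometric transformations of the same object across different scenes and viewing angles are a major challenge for detection and recognition. The usual approaches are:

1. Use data augmentation to obtain samples from as many different scenes as possible, strengthening the model's ability to adapt.

2. Design features or algorithms that are invariant to geometric transformations.

Both approaches have drawbacks. The first is limited by the available samples, so the model generalizes poorly and does not carry over to general scenes. The second fails because hand-designed invariant features and algorithms are hard, or impossible, to construct for overly complex transformations. The authors therefore proposed Deformable Conv (deformable convolution) and Deformable Pooling (deformable pooling) to address this problem. Two figures are included here as an illustration; for details, see other authors' articles (see references).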

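In the figure above, the conventional convolution on the left clearly fails to capture the features of the whole sheep, whereas the deformable convolution on the right captures the features of the complete, irregularly shaped sheep.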

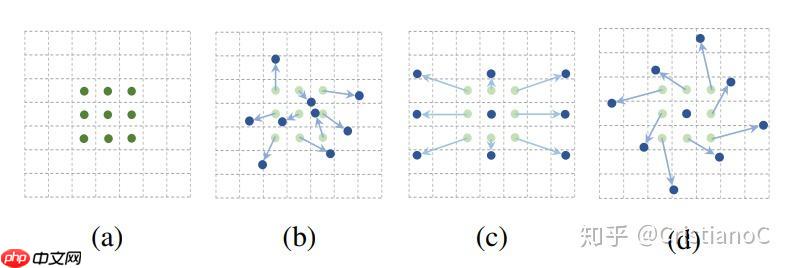

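(a) shows the regular sampling grid of a standard convolution with 9 points (green dots). (b), (c), and (d) are deformable convolutions, which add an offset (blue arrows) to each regular sampling coordinate. (c) and (d) are special cases of (b), showing that deformable convolution covers scale changes, aspect-ratio changes, and rotations as special cases.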

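The offsets come from a separate offset convolution with kernel_size = [kw, kh], whose output has shape [N, 2 * kw * kh, H, W], where N is the batch size. Each sampling point of the original kernel is shifted in both the x and y directions, so the offset convolution has 2 * kw * kh output channels. The net effect is that the bright green regular kernel on the left of the figure spreads out into the loose blue kernel (somewhat like a dilated convolution), and the convolution is then computed at the new positions. (My understanding: an offset yields a floating-point coordinate, and the value at that coordinate is a weighted combination of the four surrounding integer-coordinate pixels, i.e. bilinear interpolation. The position of one point is fixed by its offsets in the x and y directions, much as an arbitrary vector can be expressed with a pair of orthogonal basis vectors. Each kernel has kw * kh points, so 2 * kw * kh outputs are needed at every output location, which gives the shape [N, 2 * kw * kh, H, W].)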
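As a quick check of the channel count, the sketch below computes the offset tensor shape for a given input. The helper name `offset_shape`, the default stride and padding, and the example sizes are assumptions made for illustration; a single deformable group is assumed.

```python
def offset_shape(batch, in_h, in_w, kh, kw, stride=1, padding=1, dilation=1):
    """Shape of the offset tensor for a deformable convolution.

    Assumes one deformable group and the [N, 2 * kh * kw, H_out, W_out] layout.
    """
    h_out = (in_h + 2 * padding - dilation * (kh - 1) - 1) // stride + 1
    w_out = (in_w + 2 * padding - dilation * (kw - 1) - 1) // stride + 1
    return (batch, 2 * kh * kw, h_out, w_out)

# A 3x3 kernel over a batch of 32 feature maps of size 56x56 (illustrative sizes).
print(offset_shape(32, 56, 56, 3, 3))            # (32, 18, 56, 56)
print(offset_shape(32, 56, 56, 3, 3, stride=2))  # (32, 18, 28, 28)
```

The 18 channels are the x and y offsets for each of the 9 sampling points of a 3x3 kernel.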

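Deformable convolution v1 (mask = None) is computed as:

$$y(p) = \sum_{k=1}^{K} w_k \cdot x(p + p_k + \Delta p_k)$$

where

$w_k$ is the kernel weight at the k-th position and $K$ is the number of sampling points (9 for a 3×3 kernel);

$p_k$ is the coordinate of the k-th position of the standard convolution (fixed);

$\Delta p_k$ and $\Delta m_k$ are the learnable offset and the modulation scalar for the k-th position. In deformable conv v1, $\Delta m_k$ is 1 (reference link).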
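The formula can be evaluated by hand for a single output location. The following is a minimal pure-Python sketch, assuming a single-channel input, a 3x3 kernel, zero padding outside the image, and offsets that are supplied directly rather than predicted by an offset convolution. The function names `bilinear` and `deform_conv_at` are hypothetical and not part of the project or of Paddle.

```python
import math

def bilinear(x, r, c):
    """Sample 2D list x at fractional (row, col); out-of-range pixels count as 0."""
    r0, c0 = math.floor(r), math.floor(c)
    value = 0.0
    for rr in (r0, r0 + 1):
        for cc in (c0, c0 + 1):
            if 0 <= rr < len(x) and 0 <= cc < len(x[0]):
                weight = (1 - abs(r - rr)) * (1 - abs(c - cc))
                value += weight * x[rr][cc]
    return value

def deform_conv_at(x, w, p, offsets):
    """y(p) = sum_k w_k * x(p + p_k + dp_k) for a 3x3 kernel at location p."""
    grid = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]  # fixed p_k
    y = 0.0
    for k, (dr, dc) in enumerate(grid):
        off_r, off_c = offsets[k]  # dp_k (given here, not learned)
        y += w[k] * bilinear(x, p[0] + dr + off_r, p[1] + dc + off_c)
    return y

x = [[float(4 * i + j) for j in range(4)] for i in range(4)]  # toy 4x4 input
w = [1.0] * 9                                                   # toy weights
zero = [(0.0, 0.0)] * 9
shifted = [(0.0, 0.5)] * 9  # every sampling point moved half a pixel to the right

print(deform_conv_at(x, w, (1, 1), zero))     # 45.0, same as a standard 3x3 convolution
print(deform_conv_at(x, w, (1, 1), shifted))  # 49.5
```

With all offsets at zero the result matches the ordinary convolution, which is why deformable convolution can be seen as a generalization of the standard one.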

    # Unzip the data
    !unzip -q -d /home/aistudio /home/aistudio/data/data155487/data.zip

    # Import dependencies
    import paddle
    import paddle.vision.transforms as T
    from PIL import Image
    import time
    import os

    import numpy as np
    import matplotlib.pyplot as plt
    import pandas as pd
    # Import the custom model definitions
    import models

    paddle.device.set_device("gpu:0")  # train on the GPU
    ROOT_DIR = '/home/aistudio/data'

Reading and inspecting the data

    data = pd.read_csv('data/train.csv')
    # Number of images
    print('Number of images:', data.shape[0])
    # Number of spectrogram classes
    classes_nums = data['label'].nunique()
    print('Number of classes:', classes_nums)
    # Label column
    classes = data['label']
    print('Label of the first image:', classes[0])
    # Image path column
    img_paths = data['image']

    img_path = os.path.join('data', data['image'][0])
    print('Path of the first image:', img_path)

Output:

    Number of images: 2143
    Number of classes: 24
    Label of the first image: 12
    Path of the first image: data/train_0.jpg

Split the data into train and valid sets:

    # Split into train and valid sets
    train_data = data[:int(classes.shape[0]*0.8)]
    valid_data = data[int(classes.shape[0]*0.8):]

    valid_data['label']
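The split takes the first 80% of the rows for training and the remainder for validation, so the validation rows keep their original row numbers. A small sketch of the resulting sizes, using plain index lists instead of a DataFrame (the helper name `sequential_split` is an assumption for illustration):

```python
def sequential_split(n_rows, train_fraction=0.8):
    """Return (train_indices, valid_indices) for a first-80%/last-20% split."""
    cut = int(n_rows * train_fraction)
    return list(range(cut)), list(range(cut, n_rows))

train_idx, valid_idx = sequential_split(2143)
print(len(train_idx), len(valid_idx))  # 1714 429
print(valid_idx[0])                    # 1714
```

Because the first validation row is numbered 1714 rather than 0, the dataset class needs an offset when it looks up validation samples by position.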

    # Image loading helper
    def load_image(img_path):
        img = Image.open(img_path).convert(mode='RGB')  # convert to 3-channel RGB
        # img = img.resize(img.size)
        img = img.resize((224,224))
        # return np.array(img).astype('float32')
        return np.array(img)

Loading the training and validation sets

    # Dataset definition
    class MyDataset(paddle.io.Dataset):
        def __init__(self, data, mode='train', valid_start=None):
            super(MyDataset, self).__init__()
            self.data = data
            self.mode = mode
            if self.mode == 'train':
                self.transform = T.Compose([
                    T.BrightnessTransform(0.4),  # brightness jitter (expects integer pixel values)
                    T.ContrastTransform(0.4),    # contrast jitter
                    T.HueTransform(0.4),         # hue jitter
                    T.RandomErasing(),           # random erasing
                    T.Normalize(mean=[123.675, 116.28, 103.53],
                                std=[58.395, 57.12, 57.375], data_format='HWC'),  # normalization
                    T.Transpose()  # layout conversion; Transpose defaults to (2, 0, 1)
                ])
            if self.mode == 'valid':
                self.transform = T.Compose([
                    T.Normalize(mean=[123.675, 116.28, 103.53],
                                std=[58.395, 57.12, 57.375], data_format='HWC'),
                    T.Transpose()
                ])
            self.valid_start = valid_start

        def __getitem__(self, index):
            # The validation slice keeps the original row labels, so shift the index
            if self.mode == 'valid':
                index = index + self.valid_start
            # Get the label
            label = self.data['label'][index]
            # Build the image path
            img_path = os.path.join(ROOT_DIR, 'train', self.data['image'][index])
            # Read the image
            image = load_image(img_path)
            # Apply the augmentation pipeline
            image = self.transform(image)
            return image.astype('float32'), label

        def __len__(self):
            return len(self.data)

    # Create the train and valid datasets
    train_dataset = MyDataset(train_data, mode='train')
    valid_dataset = MyDataset(valid_data, mode='valid', valid_start=int(classes.shape[0]*0.8))
    # Create the train and valid loaders; set the batch size and shuffle the training data
    train_loader = paddle.io.DataLoader(train_dataset, shuffle=True, batch_size=32, drop_last=True)
    valid_loader = paddle.io.DataLoader(valid_dataset, shuffle=False, batch_size=10, drop_last=False)
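The `mean` and `std` values passed to `T.Normalize` match the widely used ImageNet channel statistics rescaled from the 0 to 1 range to the 0 to 255 range of uint8 pixels. That reading is an assumption, since the source does not say where the numbers come from. The sketch below reproduces the numbers and applies the same per-channel formula to one pixel; `normalize_pixel` is a hypothetical helper.

```python
IMAGENET_MEAN = [0.485, 0.456, 0.406]  # common ImageNet statistics for [0, 1] pixels
IMAGENET_STD = [0.229, 0.224, 0.225]

# Rescale to the 0-255 range used by the uint8 images in this project.
mean_255 = [round(m * 255, 3) for m in IMAGENET_MEAN]
std_255 = [round(s * 255, 3) for s in IMAGENET_STD]
print(mean_255)  # [123.675, 116.28, 103.53]
print(std_255)   # [58.395, 57.12, 57.375]

def normalize_pixel(rgb, mean=mean_255, std=std_255):
    """Apply (value - mean) / std to one RGB pixel."""
    return [(v - m) / s for v, m, s in zip(rgb, mean, std)]

print(normalize_pixel([255, 255, 255]))
```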

Loading the test set

    # List the names of all test images
    test_img_names = os.listdir('/home/aistudio/data/test')
    print(len(test_img_names))

Output:

    1020

Sort the names and define the test dataset:

    # Re-sort the image names by their numeric suffix
    test_img_names_order = sorted(test_img_names, key=lambda x: int(x.split('.')[0].split('_')[-1]))
    # test_img_names_order

    # Test dataset definition
    class TestDataset(paddle.io.Dataset):
        def __init__(self, data, mode='test'):
            super(TestDataset, self).__init__()
            self.data = data
            self.mode = mode
            self.transform = T.Compose([
                T.Normalize(mean=[123.675, 116.28, 103.53],
                            std=[58.395, 57.12, 57.375], data_format='HWC'
                            ),  # normalization
                T.Transpose()  # layout conversion; Transpose defaults to (2, 0, 1)
            ])

        def __getitem__(self, index):
            # Get the file name
            img_name = self.data[index]
            # Build the image path
            img_path = os.path.join(ROOT_DIR, 'test', self.data[index])
            # Read the image
            image = load_image(img_path)
            # Apply the transform pipeline
            image = self.transform(image)
            return image.astype('float32'), img_name

        def __len__(self):
            return len(self.data)

    # Load the test set
    test_dataset = TestDataset(data=test_img_names_order, mode='test')

    # Check the test dataset by printing the name of one test image
    test_dataset[1][1]

Output:

    'test_1.jpg'
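The numeric sort matters because a plain string sort places `test_10.jpg` before `test_2.jpg`. A short sketch with made-up file names shows the difference; the helper name `numeric_suffix` is an assumption that mirrors the lambda used above.

```python
names = ['test_10.jpg', 'test_2.jpg', 'test_1.jpg', 'test_0.jpg']

def numeric_suffix(name):
    """'test_10.jpg' -> 10"""
    return int(name.split('.')[0].split('_')[-1])

print(sorted(names))                      # ['test_0.jpg', 'test_1.jpg', 'test_10.jpg', 'test_2.jpg']
print(sorted(names, key=numeric_suffix))  # ['test_0.jpg', 'test_1.jpg', 'test_2.jpg', 'test_10.jpg']
```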

    # Load the ResNet50-DCN model with pretrained weights
    resnet_net = models.resnet50(pretrained=True, num_classes=24)

    # Wrap the model with the high-level API
    model = paddle.Model(resnet_net)
    # Create the optimizer and set the learning rate
    optim = paddle.optimizer.Adam(learning_rate=1e-4, parameters=model.parameters())
    # Training configuration
    model.prepare(
        optim,
        loss=paddle.nn.CrossEntropyLoss(),
        metrics=paddle.metric.Accuracy()
    )

    callback1 = paddle.callbacks.VisualDL(log_dir='visualdl_log_dir')  # log the training process for VisualDL
    # Start training
    model.fit(train_loader, valid_loader, epochs=90, eval_freq=2, verbose=1, callbacks=[callback1])

Output:

    The loss value printed in the log is the current step, and the metric is the average value of previous steps.
    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:712: UserWarning: When training, we now always track global mean and variance.
      "When training, we now always track global mean and variance."

Each epoch runs 53 training steps, and each evaluation runs 43 steps over 429 samples. The per-epoch values reported in the log are:

| Epoch | Train loss | Train acc | Train ms/step | Eval loss | Eval acc | Eval ms/step |
|---|---|---|---|---|---|---|
| 1 | 3.1927 | 0.0419 | 503 | 3.1498 | 0.0466 | 111 |
| 2 | 3.2429 | 0.0383 | 458 | | | |
| 3 | 3.2695 | 0.0448 | 441 | 3.3218 | 0.0303 | 122 |
| 4 | 3.1471 | 0.0531 | 464 | | | |
| 5 | 3.1612 | 0.0513 | 466 | 3.1134 | 0.0723 | 105 |
| 6 | 3.1925 | 0.0643 | 462 | | | |
| 7 | 3.1686 | 0.0660 | 463 | 2.5680 | 0.0723 | 108 |
| 8 | 3.1395 | 0.0743 | 449 | | | |
| 9 | 3.0175 | 0.0784 | 485 | 2.7788 | 0.0956 | 107 |
| 10 | 2.9871 | 0.0949 | 463 | | | |
| 11 | 2.8698 | 0.1138 | 454 | 2.8364 | 0.0979 | 127 |
| 12 | 2.8052 | 0.1221 | 455 | | | |
| 13 | 2.8459 | 0.1616 | 459 | 2.6274 | 0.1259 | 105 |
| 14 | 2.5441 | 0.1887 | 468 | | | |
| 15 | 2.3795 | 0.2246 | 467 | 1.9842 | 0.2774 | 127 |
| 16 | 2.3704 | 0.2942 | 525 | | | |
| 17 | 2.2671 | 0.3290 | 486 | 1.4051 | 0.3287 | 109 |
| 18 | 1.9189 | 0.3809 | 478 | | | |
| 19 | 1.8451 | 0.4381 | 488 | 1.2951 | 0.4056 | 116 |
| 20 | 1.6947 | 0.5018 | 459 | | | |
| 21 | 1.5430 | 0.5389 | 470 | 0.9600 | 0.5618 | 113 |
| 22 | 1.3332 | 0.6197 | 485 | | | |
| 23 | 1.1644 | 0.6268 | 447 | 0.6927 | 0.6037 | 108 |
| 24 | 1.0518 | 0.6946 | 448 | | | |
| 25 | 0.7262 | 0.7329 | 472 | 0.5536 | 0.6993 | 104 |
| 26 | 0.6846 | 0.7406 | 447 | | | |
| 27 | 0.6977 | 0.7765 | 479 | 0.5581 | 0.7179 | 112 |
| 28 | 0.5008 | 0.8154 | 497 | | | |
| 29 | 0.6462 | 0.8438 | 458 | 0.2971 | 0.7086 | 102 |
| 30 | 0.6442 | 0.8526 | 466 | | | |
| 31 | 0.4941 | 0.8667 | 445 | 0.2377 | 0.7506 | 152 |
| 32 | 0.3167 | 0.9021 | 434 | | | |
| 33 | 0.1696 | 0.9116 | 466 | 0.1650 | 0.7646 | 109 |
| 34 | 0.1703 | 0.9074 | 467 | | | |
| 35 | 0.1415 | 0.9216 | 465 | 0.3412 | 0.7739 | 120 |
| 36 | 0.2013 | 0.9304 | 439 | | | |
| 37 | 0.1673 | 0.9287 | 456 | 0.1123 | 0.7669 | 103 |
| 38 | 0.2036 | 0.9499 | 475 | | | |
| 39 | 0.1945 | 0.9351 | 465 | 0.8336 | 0.7529 | 115 |
| 40 | 0.1870 | 0.9404 | 475 | | | |
| 41 | 0.1277 | 0.9611 | 451 | 0.1676 | 0.7669 | 116 |
| 42 | 0.2235 | 0.9493 | 443 | | | |
| 43 | 0.1583 | 0.9452 | 472 | 0.6762 | 0.7692 | 108 |
| 44 | 0.2066 | 0.9469 | 468 | | | |
| 45 | 0.0378 | 0.9399 | 455 | 0.2462 | 0.7646 | 113 |
| 46 | 0.3767 | 0.9564 | 486 | | | |
| 47 | 0.1808 | 0.9593 | 448 | 0.1106 | 0.7646 | 121 |
| 48 | 0.1386 | 0.9546 | 435 | | | |
| 49 | 0.2941 | 0.9640 | 494 | 0.1883 | 0.7599 | 116 |
| 50 | 0.1425 | 0.9617 | 457 | | | |
| 51 | 0.0866 | 0.9617 | 481 | 0.2950 | 0.7622 | 105 |
| 52 | 0.1512 | 0.9629 | 469 | | | |
| 53 | 0.1060 | 0.9752 | 446 | 0.1060 | 0.7949 | 111 |
| 54 | 0.0280 | 0.9758 | 492 | | | |
| 55 | 0.0724 | 0.9752 | 466 | 0.5074 | 0.7949 | 111 |
| 56 | 0.0205 | 0.9723 | 474 | | | |
| 57 | 0.0429 | 0.9705 | 466 | 0.2588 | 0.7762 | 113 |
| 58 | 0.1691 | 0.9723 | 467 | | | |
| 59 | 0.1695 | 0.9605 | 453 | 0.3465 | 0.7576 | 112 |
| 60 | 0.2683 | 0.9658 | 445 | | | |
| 61 | 0.0321 | 0.9634 | 447 | 0.4822 | 0.7576 | 105 |
| 62 | 0.0861 | 0.9664 | 486 | | | |
| 63 | 0.0670 | 0.9717 | 451 | 0.1761 | 0.7506 | 118 |
| 64 | 0.0327 | 0.9758 | 464 | | | |
| 65 | 0.0834 | 0.9735 | 448 | 0.2367 | 0.7436 | 103 |
| 66 | 0.0353 | 0.9782 | 462 | | | |
| 67 | 0.2589 | 0.9746 | 464 | 0.4783 | 0.7832 | 111 |
| 68 | 0.0448 | 0.9841 | 466 | | | |
| 69 | 0.1902 | 0.9776 | 457 | 0.1164 | 0.7622 | 104 |
| 70 | 0.2499 | 0.9746 | 465 | | | |
| 71 | 0.1311 | 0.9817 | 463 | 0.2597 | 0.7786 | 114 |
| 72 | 0.0990 | 0.9752 | 446 | | | |
| 73 | 0.0124 | 0.9705 | 480 | 0.4437 | 0.7413 | 120 |
| 74 | 0.1568 | 0.9717 | 458 | | | |
| 75 | 0.1583 | 0.9711 | 464 | 0.2247 | 0.7599 | 112 |
| 76 | 0.1303 | 0.9782 | 454 | | | |
| 77 | 0.0870 | 0.9717 | 454 | 0.2439 | 0.7622 | 115 |
| 78 | 0.1416 | 0.9735 | 486 | | | |
| 79 | 0.0401 | 0.9794 | 455 | 0.5282 | 0.7692 | 110 |
| 80 | 0.0090 | 0.9794 | 502 | | | |
| 81 | 0.0869 | 0.9699 | 458 | 0.4070 | 0.7506 | 105 |
| 82 | 0.2644 | 0.9658 | 445 | | | |
| 83 | 0.0142 | 0.9770 | 489 | 0.4563 | 0.7436 | 109 |
| 84 | 0.1400 | 0.9870 | 467 | | | |
| 85 | 0.0161 | 0.9705 | 458 | 0.4269 | 0.7622 | 110 |
| 86 | 0.1201 | 0.9853 | 479 | | | |
| 87 | 0.0189 | 0.9805 | 454 | 0.9262 | 0.7506 | 131 |
| 88 | 0.1661 | 0.9770 | 472 | | | |
| 89 | 0.1646 | 0.9752 | 463 | 0.2383 | 0.7786 | 111 |
| 90 | 0.2614 | 0.9776 | 448 | | | |
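In the log, training accuracy climbs to roughly 0.98 while evaluation accuracy levels off between about 0.74 and 0.79, so the best evaluated epoch is not the last one. As a minimal sketch, the snippet below picks the best epoch from a handful of (epoch, eval accuracy) pairs copied from the log; the helper `best_epoch` is a hypothetical name and is not part of the project.

```python
# (epoch, eval accuracy) pairs copied from a few evaluations in the log above.
eval_acc = [(31, 0.7506), (35, 0.7739), (53, 0.7949),
            (55, 0.7949), (67, 0.7832), (89, 0.7786)]

def best_epoch(history):
    """Return the earliest (epoch, acc) pair with the highest accuracy."""
    return max(history, key=lambda item: (item[1], -item[0]))

print(best_epoch(eval_acc))  # (53, 0.7949)
```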

    model.save('checkpoint/resnet50-dcn')

    # Create the model
    resnet50_dcn = models.resnet50(pretrained=False, num_classes=24)
    # Wrap the model
    model = paddle.Model(resnet50_dcn)
    # Load the parameters
    model.load("checkpoint/resnet50-dcn.pdparams")
    # Prepare the model
    model.prepare()

Output:

    W0416 11:30:43.951839 11026 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 11.2
    W0416 11:30:43.956612 11026 gpu_resources.cc:91] device: 0, cuDNN Version: 8.2.

Run prediction on the validation set:

    # Use predict to run the model on the validation set
    test_result = model.predict(valid_dataset)
    # The model has a single output, so test_result has shape [1, 429, 1, 24]; 429 is the number of validation samples.
    # Print the result for the first sample; the array holds the predicted score for each class
    # print(len(test_result))
    # print(test_result[0][0])

Output:

    Predict begin...
    step 429/429 [==============================] - 24ms/step
    Predict samples: 429

Check the size of the validation set:

    len(valid_dataset)

Output:

    429

    # Validation accuracy
    # Converting to dicts can speed up the computation
    # Predicted labels
    pred_labels = dict((i, pred_label.argmax()) for i, pred_label in enumerate(test_result[0]))
    # True labels
    labels = dict((i, label) for i, label in enumerate(valid_data['label']))

    Acc = 0
    for i in range(len(pred_labels)):
        if pred_labels[i] == labels[i]:
            Acc += 1
    print("Acc:", Acc / len(pred_labels))

Output:

    Acc: 0.7668997668997669

Compare the prediction with the true label; whether the two agree tells us whether the model's prediction is right or wrong.

    # Take one image from the validation set; sample_n is its position and must be less than 429
    sample_n = 219
    img, label = valid_dataset[sample_n]
    # Print the inference result; argmax returns the index of the highest score, used as the predicted label
    pred_label = test_result[0][sample_n].argmax()
    print('true label: {}, pred label: {}'.format(label, pred_label))
    # Visualize the image with matplotlib
    plt.imshow(img[0])

Output:

    true label: 9, pred label: 9
    <matplotlib.image.AxesImage at 0x7f6c64137150>
    <Figure size 640x480 with 1 Axes>

    # Predict on the test set
    predicts = model.predict(test_data=test_dataset)

Output:

    Predict begin...
    step 1020/1020 [==============================] - 24ms/step
    Predict samples: 1020

Convert the predictions to labels and save them:

    # predicts has shape [1, 1020, 1, 24] (on the outside it is list[tuple[ndarray]]); take the index of the highest score
    results = np.array(predicts).reshape((1020, 24)).argmax(axis=1)

    # Convert the predictions to a list, one class per element
    pre_labels = list(results)
    pre_results = zip(test_img_names_order, pre_labels)
    pre_results = list(pre_results)
    # Save the results as a CSV file
    df = pd.DataFrame(pre_results, columns=['image', 'label'])
    df.to_csv('submit.csv', index=False)
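To see what the reshape and argmax step does, here is a small pure-Python sketch on a toy stand-in for the nested prediction structure. The toy scores, the three file names, and the `argmax` helper are assumptions for illustration; the real structure has 1020 samples with 24 scores each.

```python
def argmax(scores):
    """Index of the largest score."""
    return max(range(len(scores)), key=scores.__getitem__)

# Toy stand-in for the prediction output: one model output, 3 samples,
# batch size 1, 4 classes (the real structure is [1][1020][1][24]).
predicts = [[
    [[0.1, 2.0, -1.0, 0.3]],
    [[1.5, 0.2, 0.0, -0.7]],
    [[-0.2, 0.1, 0.4, 3.0]],
]]
names = ['test_0.jpg', 'test_1.jpg', 'test_2.jpg']

labels = [argmax(batch[0]) for batch in predicts[0]]
rows = list(zip(names, labels))
print(rows)  # [('test_0.jpg', 1), ('test_1.jpg', 0), ('test_2.jpg', 3)]
```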
